mirror of

https://github.com/201206030/novel-plus.git

synced 2025-07-01 07:16:39 +00:00

Compare commits

14 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | 3849a9b86f | | |
| | 71b9d1d916 | | |
| | 4b9dbe969c | | |
| | 2136f7490f | | |
| | 3586ffbc0a | | |
| | f78a2a36cf | | |
| | a8219253e9 | | |
| | 5c35f7af0a | | |
| | d55e1a3e22 | | |
| | 21a6a49ce9 | | |
| | 3735023cef | | |
| | 89992dc781 | | |
| | 976db9420e | | |
| | e33db86081 | | |

@ -61,9 +61,9 @@ novel-plus -- parent project

|

||||

|

||||

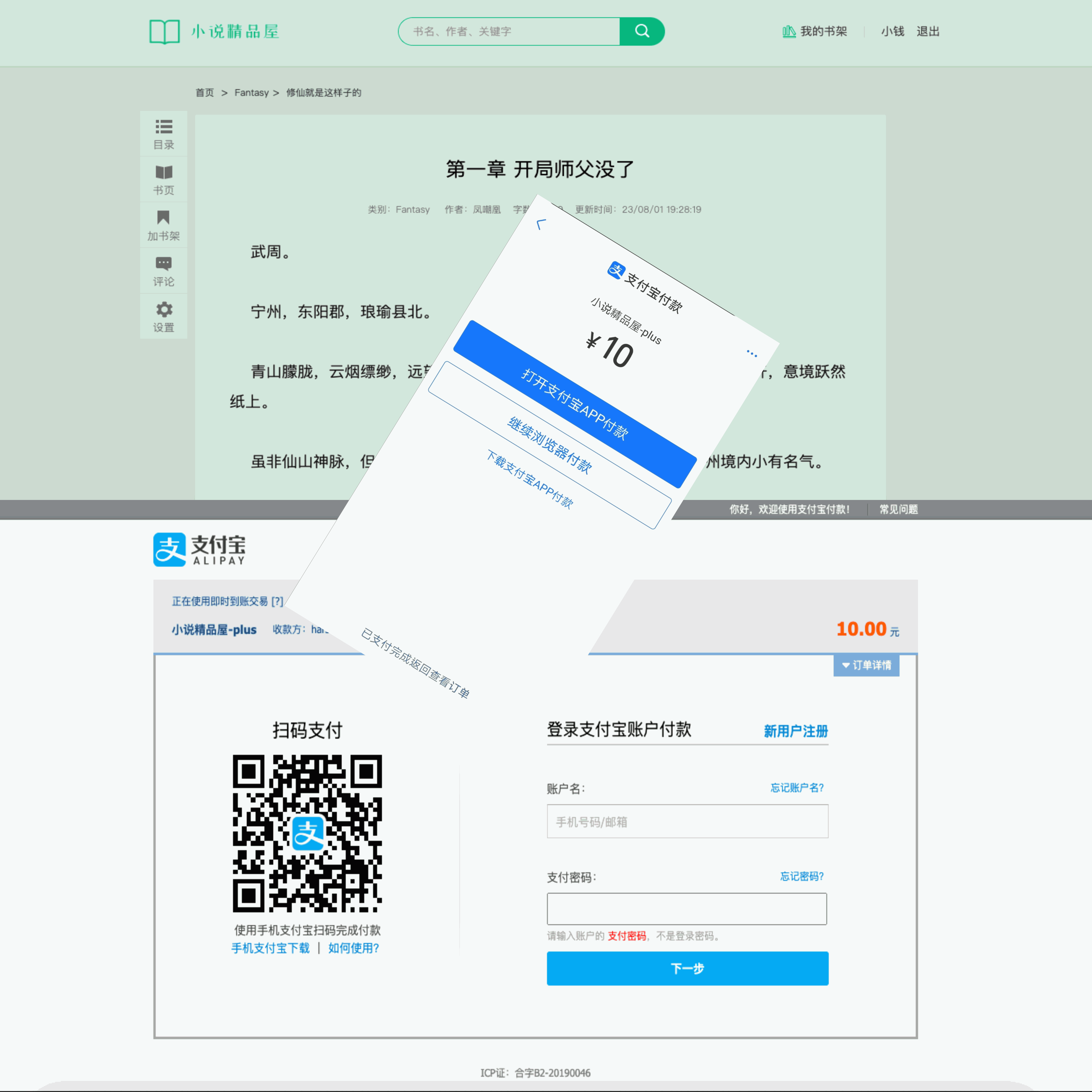

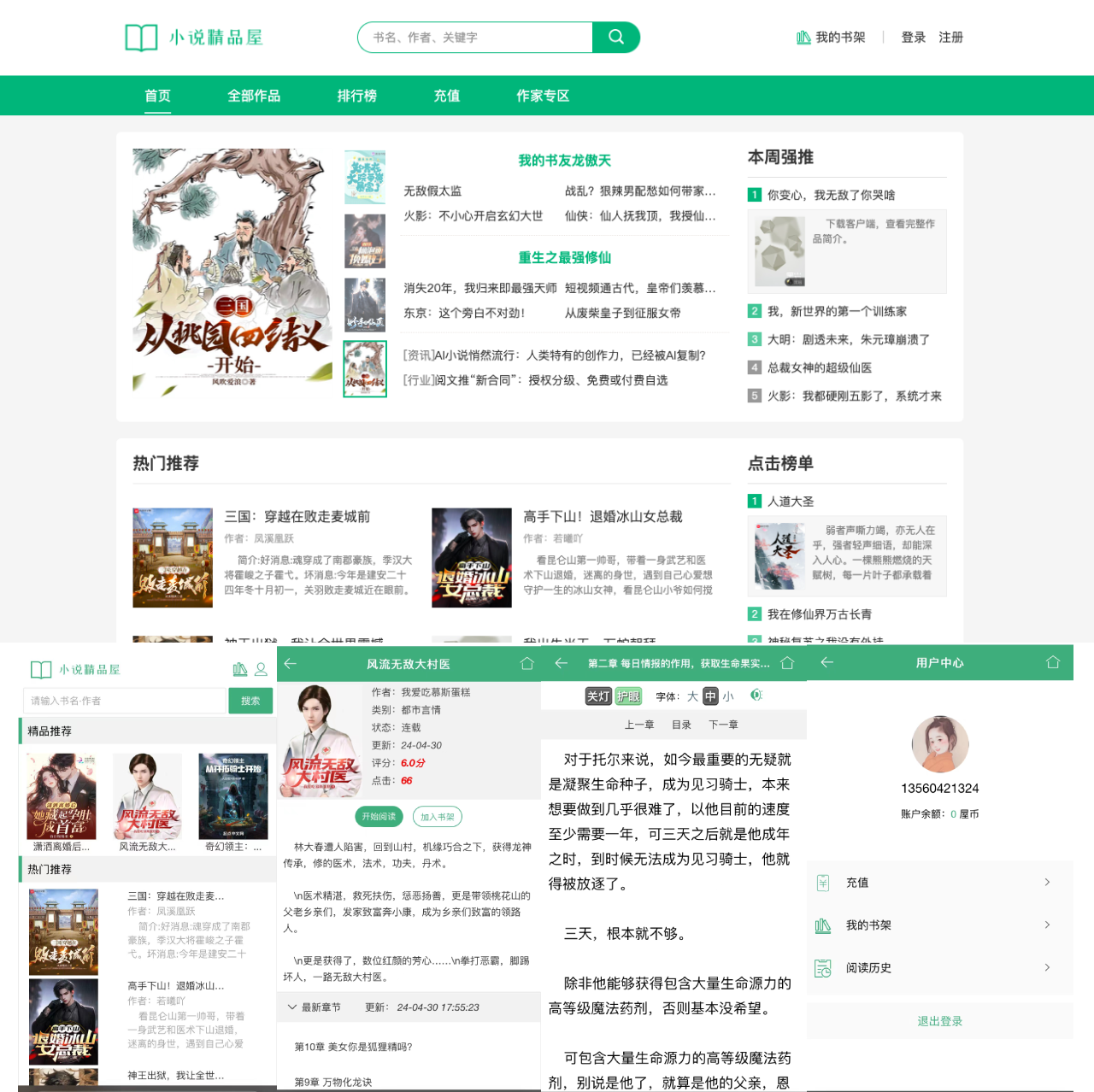

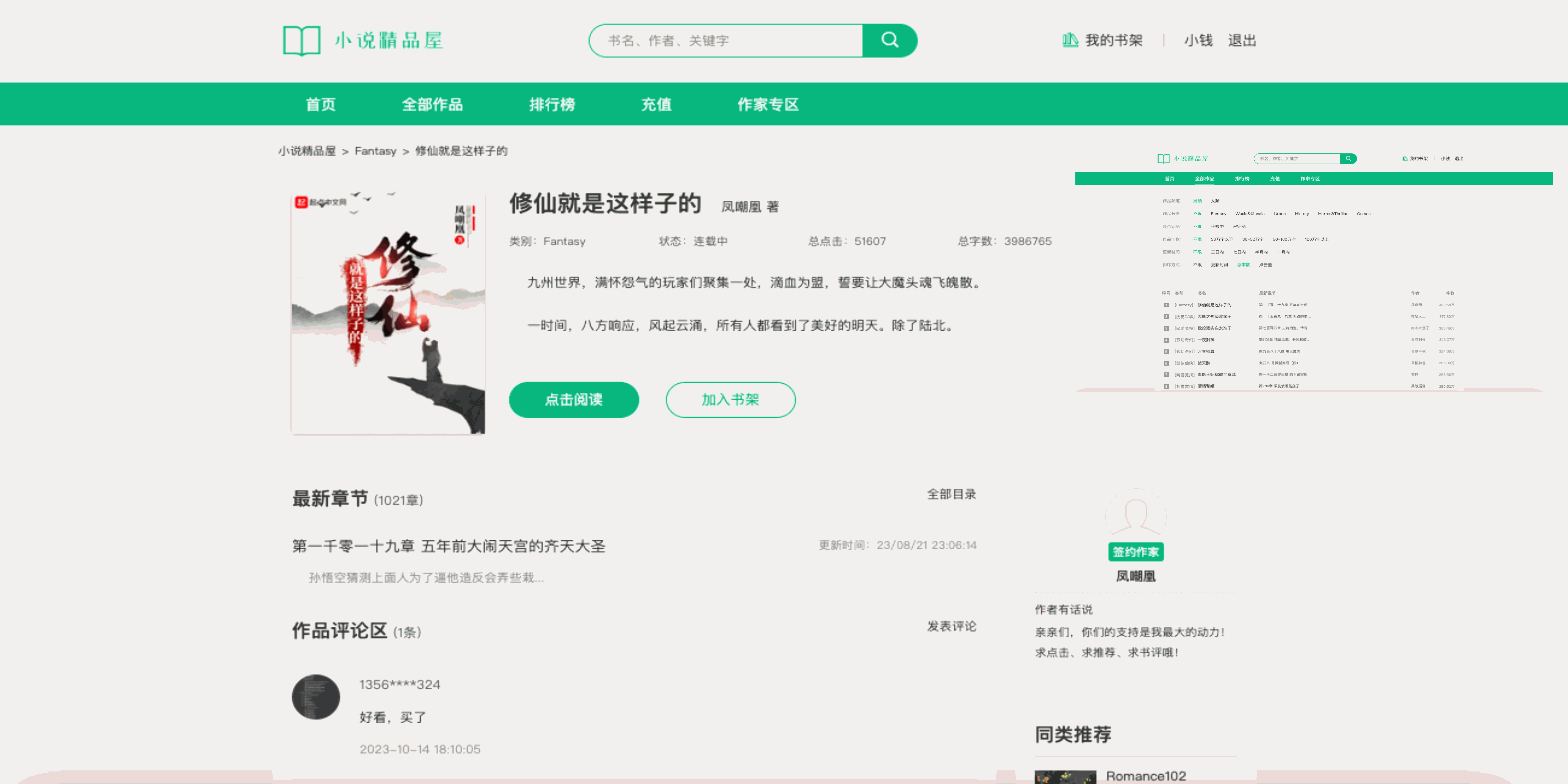

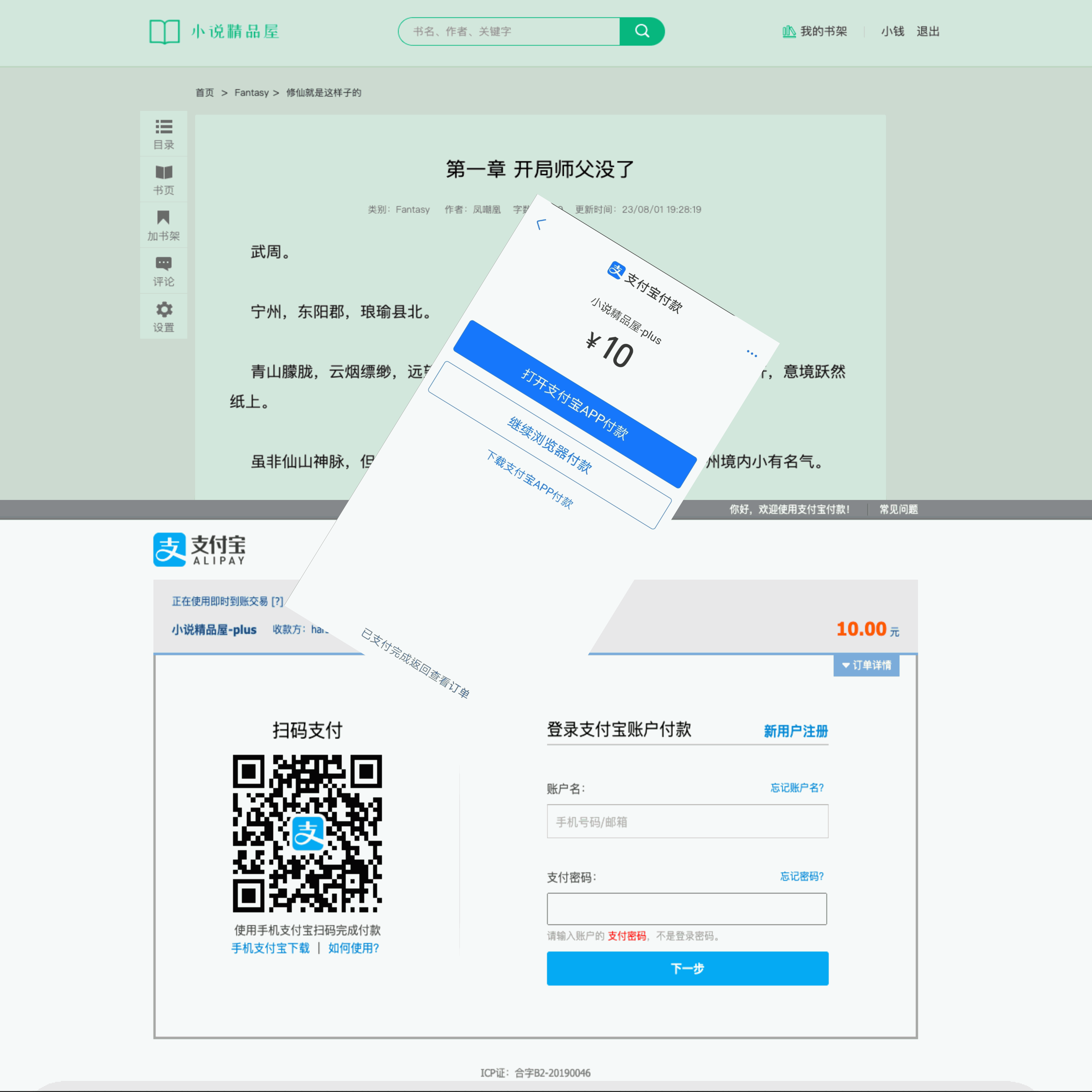

### Green theme template

|

||||

|

||||

[](https://youdoc.gitee.io/resource/images/os/novel-plus/green.png)

|

||||

[](https://youdoc.gitee.io/resource/images/os/novel-plus/green3.png)

|

||||

[](https://youdoc.gitee.io/resource/images/os/novel-plus/green2.png)

|

||||

[](https://www.xxyopen.com/images/green_novel.png)

|

||||

[](https://www.xxyopen.com/images/resource/os/novel-plus/green3.png)

|

||||

[](https://www.xxyopen.com/images/resource/os/novel-plus/green2.png)

|

||||

|

||||

## Demo video

|

||||

|

||||

|

||||

@ -1,7 +1,5 @@

-CREATE
-database if NOT EXISTS `novel_plus` default character set utf8mb4 collate utf8mb4_unicode_ci;
-use
-`novel_plus`;
+CREATE database if NOT EXISTS `novel_plus` default character set utf8mb4 collate utf8mb4_unicode_ci;
+use `novel_plus`;
 
 SET NAMES utf8mb4;
 

|

||||

@ -3105,4 +3103,42 @@ where id = 16;

 update website_info
 set logo = '/images/logo.png',
 logo_dark='/images/logo.png'
-where id = 1;
+where id = 1;
+
+
+INSERT INTO crawl_source (source_name, crawl_rule, source_status, create_time, update_time)
+VALUES ('香书小说网', '{
+  "bookListUrl": "http://www.xbiqugu.net/fenlei/{catId}_{page}.html",
+  "catIdRule": {
+    "catId1": "1",
+    "catId2": "2",
+    "catId3": "3",
+    "catId4": "4",
+    "catId5": "6",
+    "catId6": "5"
+  },
+  "bookIdPatten": "<a\\\\s+href=\\"http://www.xbiqugu.net/(\\\\d+/\\\\d+)/\\"\\\\s+target=\\"_blank\\">",
+  "pagePatten": "<em\\\\s+id=\\"pagestats\\">(\\\\d+)/\\\\d+</em>",
+  "totalPagePatten": "<em\\\\s+id=\\"pagestats\\">\\\\d+/(\\\\d+)</em>",
+  "bookDetailUrl": "http://www.xbiqugu.net/{bookId}/",
+  "bookNamePatten": "<h1>([^/]+)</h1>",
+  "authorNamePatten": "者:([^/]+)</p>",
+  "picUrlPatten": "src=\\"(http://www.xbiqugu.net/files/article/image/\\\\d+/\\\\d+/\\\\d+s\\\\.jpg)\\"",
+  "bookStatusRule": {},
+  "descStart": "<div id=\\"intro\\">",
+  "descEnd": "</div>",
+  "upadateTimePatten": "<p>最后更新:(\\\\d+-\\\\d+-\\\\d+\\\\s\\\\d+:\\\\d+:\\\\d+)</p>",
+  "upadateTimeFormatPatten": "yyyy-MM-dd HH:mm:ss",
+  "bookIndexUrl": "http://www.xbiqugu.net/{bookId}/",
+  "indexIdPatten": "<a\\\\s+href=''/\\\\d+/\\\\d+/(\\\\d+)\\\\.html''\\\\s+>[^/]+</a>",
+  "indexNamePatten": "<a\\\\s+href=''/\\\\d+/\\\\d+/\\\\d+\\\\.html''\\\\s+>([^/]+)</a>",
+  "bookContentUrl": "http://www.xbiqugu.net/{bookId}/{indexId}.html",
+  "contentStart": "<div id=\\"content\\">",
+  "contentEnd": "<p>",
+  "filterContent":"<div\\\\s+id=\\"content_tip\\">\\\\s*<b>([^/]+)</b>\\\\s*</div>"
+}', 0, '2024-06-01 10:11:39', '2024-06-01 10:11:39');
+
+
+update crawl_source
+set crawl_rule = replace(crawl_rule, 'ibiquzw.org', 'ibiqugu.net')
+where id = 16;

|

||||
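The page-count rules in the inserted crawl source can be tried in isolation. The following is a minimal sketch, assuming that after SQL and JSON unescaping `pagePatten` reads `<em\s+id="pagestats">(\d+)/\d+</em>` and `totalPagePatten` reads `<em\s+id="pagestats">\d+/(\d+)</em>`. The class name, the helper `firstGroup`, and the sample markup are hypothetical and not part of the project.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class PageStatsSketch {
    // Assumed decoded forms of "pagePatten" and "totalPagePatten" from the rule above.
    static final Pattern PAGE = Pattern.compile("<em\\s+id=\"pagestats\">(\\d+)/\\d+</em>");
    static final Pattern TOTAL = Pattern.compile("<em\\s+id=\"pagestats\">\\d+/(\\d+)</em>");

    /** Returns the first capture group as an int, or -1 when the pattern does not match. */
    static int firstGroup(Pattern pattern, String html) {
        Matcher m = pattern.matcher(html);
        return m.find() ? Integer.parseInt(m.group(1)) : -1;
    }

    public static void main(String[] args) {
        // Made-up sample markup, not taken from the crawled site.
        String html = "<div><em id=\"pagestats\">3/120</em></div>";
        System.out.println(firstGroup(PAGE, html) + " of " + firstGroup(TOTAL, html));
    }
}
```

Both rules match the same element and differ only in which number is captured, so the current page and the total page count come from one `<em>` tag.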

@ -5,7 +5,7 @@

 
     <groupId>com.java2nb</groupId>
     <artifactId>novel-admin</artifactId>
-    <version>4.3.0</version>
+    <version>4.4.0</version>
     <packaging>jar</packaging>
 
     <name>novel-admin</name>

|

||||

@ -5,7 +5,7 @@

     <parent>
         <artifactId>novel</artifactId>
         <groupId>com.java2nb</groupId>
-        <version>4.3.0</version>
+        <version>4.4.0</version>
     </parent>
     <modelVersion>4.0.0</modelVersion>
 

|

||||

@ -14,7 +14,10 @@ import org.springframework.http.ResponseEntity;

 
 import javax.imageio.ImageIO;
 import java.awt.image.BufferedImage;
-import java.io.*;
+import java.io.File;
+import java.io.FileOutputStream;
+import java.io.InputStream;
+import java.io.OutputStream;
 import java.util.Date;
 import java.util.Objects;
 

|

||||

@ -37,10 +40,13 @@ public class FileUtil {

             // Save the image locally
             HttpHeaders headers = new HttpHeaders();
             HttpEntity<String> requestEntity = new HttpEntity<>(null, headers);
-            ResponseEntity<Resource> resEntity = RestTemplateUtil.getInstance(Charsets.ISO_8859_1.name()).exchange(picSrc, HttpMethod.GET, requestEntity, Resource.class);
+            ResponseEntity<Resource> resEntity = RestTemplates.newInstance(Charsets.ISO_8859_1.name())
+                .exchange(picSrc, HttpMethod.GET, requestEntity, Resource.class);
             input = Objects.requireNonNull(resEntity.getBody()).getInputStream();
             Date currentDate = new Date();
-            picSrc = visitPrefix + DateUtils.formatDate(currentDate, "yyyy") + "/" + DateUtils.formatDate(currentDate, "MM") + "/" + DateUtils.formatDate(currentDate, "dd") + "/"
+            picSrc =
+                visitPrefix + DateUtils.formatDate(currentDate, "yyyy") + "/" + DateUtils.formatDate(currentDate, "MM")
+                    + "/" + DateUtils.formatDate(currentDate, "dd") + "/"
                     + UUIDUtil.getUUID32()
                     + picSrc.substring(picSrc.lastIndexOf("."));
             File picFile = new File(picSavePath + picSrc);

|

||||
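The hunk above builds the local image path as prefix, then `yyyy/MM/dd`, then a random identifier, then the extension of the source URL. The following is a minimal sketch of that layout using only the JDK; `PicPathSketch`, `buildPath`, and the sample prefix are hypothetical names. It also differs from the code above in one labeled way: the extension is taken only when the last dot comes after the last slash, so a URL without a file extension does not pick up part of the host name.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.util.UUID;

final class PicPathSketch {
    private static final DateTimeFormatter DAY_DIR = DateTimeFormatter.ofPattern("yyyy/MM/dd");

    /** Builds prefix + yyyy/MM/dd/ + id + extension (extension may be empty). */
    static String buildPath(String visitPrefix, String sourceUrl, LocalDate date, String id) {
        int dot = sourceUrl.lastIndexOf('.');
        int slash = sourceUrl.lastIndexOf('/');
        // Assumption of this sketch: a dot before the last slash belongs to the host or a directory.
        String ext = dot > slash ? sourceUrl.substring(dot) : "";
        return visitPrefix + date.format(DAY_DIR) + "/" + id + ext;
    }

    public static void main(String[] args) {
        String id = UUID.randomUUID().toString().replace("-", ""); // 32 hex characters
        System.out.println(buildPath("/localPic/", "http://example.com/cover/123s.jpg", LocalDate.now(), id));
    }
}
```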

@ -67,7 +73,6 @@ public class FileUtil {

             closeStream(input, out);
         }
 
-
         return picSrc;
     }
 

|

||||

@ -1,38 +1,24 @@

|

||||

package com.java2nb.novel.core.utils;

|

||||

|

||||

import lombok.extern.slf4j.Slf4j;

|

||||

import org.springframework.http.*;

|

||||

import org.springframework.web.client.RestTemplate;

|

||||

|

||||

/**

|

||||

* @author Administrator

|

||||

*/

|

||||

@Slf4j

|

||||

public class HttpUtil {

|

||||

|

||||

private static RestTemplate restTemplate = RestTemplateUtil.getInstance("utf-8");

|

||||

|

||||

|

||||

public static String getByHttpClient(String url) {

|

||||

try {

|

||||

|

||||

ResponseEntity<String> forEntity = restTemplate.getForEntity(url, String.class);

|

||||

if (forEntity.getStatusCode() == HttpStatus.OK) {

|

||||

return forEntity.getBody();

|

||||

} else {

|

||||

return null;

|

||||

}

|

||||

} catch (Exception e) {

|

||||

e.printStackTrace();

|

||||

return null;

|

||||

}

|

||||

}

|

||||

private static final RestTemplate REST_TEMPLATE = RestTemplates.newInstance("utf-8");

|

||||

|

||||

public static String getByHttpClientWithChrome(String url) {

|

||||

try {

|

||||

|

||||

HttpHeaders headers = new HttpHeaders();

|

||||

headers.add("user-agent","Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.67 Safari/537.36");

|

||||

headers.add("user-agent",

|

||||

"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.67 Safari/537.36");

|

||||

HttpEntity<String> requestEntity = new HttpEntity<>(null, headers);

|

||||

ResponseEntity<String> forEntity = restTemplate.exchange(url.toString(), HttpMethod.GET, requestEntity, String.class);

|

||||

ResponseEntity<String> forEntity = REST_TEMPLATE.exchange(url, HttpMethod.GET, requestEntity, String.class);

|

||||

|

||||

if (forEntity.getStatusCode() == HttpStatus.OK) {

|

||||

return forEntity.getBody();

|

||||

@ -40,8 +26,9 @@ public class HttpUtil {

|

||||

return null;

|

||||

}

|

||||

} catch (Exception e) {

|

||||

e.printStackTrace();

|

||||

log.error(e.getMessage(), e);

|

||||

return null;

|

||||

}

|

||||

}

|

||||

|

||||

}

|

||||

|

||||
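`getByHttpClientWithChrome` sends a GET request with a desktop Chrome `user-agent` header. The same request shape can be sketched with the JDK's `java.net.http` API (Java 11+), which is a swapped-in technique here and not what the project uses (it uses Spring's `RestTemplate`). `ChromeRequestSketch` and `chromeGet` are hypothetical names; no network call is made.

```java
import java.net.URI;
import java.net.http.HttpRequest;

final class ChromeRequestSketch {
    // Same user-agent string as in the hunk above.
    static final String CHROME_UA = "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) "
            + "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.67 Safari/537.36";

    /** Builds (but does not send) a GET request carrying the Chrome user-agent. */
    static HttpRequest chromeGet(String url) {
        return HttpRequest.newBuilder(URI.create(url))
                .header("User-Agent", CHROME_UA)
                .GET()
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = chromeGet("http://example.com/");
        System.out.println(request.method() + " " + request.uri());
        System.out.println(request.headers().firstValue("User-Agent").orElse("<none>"));
    }
}
```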

@ -26,16 +26,16 @@ import java.util.List;

|

||||

import java.util.Objects;

|

||||

|

||||

@Component

|

||||

public class RestTemplateUtil {

|

||||

public class RestTemplates {

|

||||

|

||||

private static HttpProxyProperties httpProxyProperties;

|

||||

|

||||

RestTemplateUtil(HttpProxyProperties properties) {

|

||||

RestTemplates(HttpProxyProperties properties) {

|

||||

httpProxyProperties = properties;

|

||||

}

|

||||

|

||||

@SneakyThrows

|

||||

public static RestTemplate getInstance(String charset) {

|

||||

public static RestTemplate newInstance(String charset) {

|

||||

|

||||

TrustStrategy acceptingTrustStrategy = (X509Certificate[] chain, String authType) -> true;

|

||||

|

||||

@ -5,7 +5,7 @@

     <parent>
         <artifactId>novel</artifactId>
         <groupId>com.java2nb</groupId>
-        <version>4.3.0</version>
+        <version>4.4.0</version>
     </parent>
     <modelVersion>4.0.0</modelVersion>
 

|

||||

@ -1,23 +1,23 @@

|

||||

package com.java2nb.novel.core.crawl;

|

||||

|

||||

import com.java2nb.novel.core.utils.HttpUtil;

|

||||

import com.java2nb.novel.core.utils.RandomBookInfoUtil;

|

||||

import com.java2nb.novel.core.utils.RestTemplateUtil;

|

||||

import com.java2nb.novel.core.utils.StringUtil;

|

||||

import com.java2nb.novel.entity.Book;

|

||||

import com.java2nb.novel.entity.BookContent;

|

||||

import com.java2nb.novel.entity.BookIndex;

|

||||

import com.java2nb.novel.utils.Constants;

|

||||

import com.java2nb.novel.utils.CrawlHttpClient;

|

||||

import io.github.xxyopen.util.IdWorker;

|

||||

import lombok.RequiredArgsConstructor;

|

||||

import lombok.SneakyThrows;

|

||||

import lombok.extern.slf4j.Slf4j;

|

||||

import org.apache.commons.lang3.StringUtils;

|

||||

import org.springframework.http.HttpStatus;

|

||||

import org.springframework.http.ResponseEntity;

|

||||

import org.springframework.web.client.RestTemplate;

|

||||

import org.springframework.stereotype.Component;

|

||||

|

||||

import java.text.SimpleDateFormat;

|

||||

import java.util.*;

|

||||

import java.util.ArrayList;

|

||||

import java.util.Date;

|

||||

import java.util.List;

|

||||

import java.util.Map;

|

||||

import java.util.regex.Matcher;

|

||||

import java.util.regex.Pattern;

|

||||

|

||||

@ -26,20 +26,19 @@ import java.util.regex.Pattern;

|

||||

*

|

||||

* @author Administrator

|

||||

*/

|

||||

@Slf4j

|

||||

@Component

|

||||

@RequiredArgsConstructor

|

||||

public class CrawlParser {

|

||||

|

||||

private static final IdWorker idWorker = IdWorker.INSTANCE;

|

||||

private final IdWorker ID_WORKER = IdWorker.INSTANCE;

|

||||

|

||||

private static final RestTemplate restTemplate = RestTemplateUtil.getInstance("utf-8");

|

||||

|

||||

private static final ThreadLocal<Integer> retryCount = new ThreadLocal<>();

|

||||

private final CrawlHttpClient crawlHttpClient;

|

||||

|

||||

@SneakyThrows

|

||||

public static void parseBook(RuleBean ruleBean, String bookId, CrawlBookHandler handler) {

|

||||

public void parseBook(RuleBean ruleBean, String bookId, CrawlBookHandler handler) {

|

||||

Book book = new Book();

|

||||

String bookDetailUrl = ruleBean.getBookDetailUrl().replace("{bookId}", bookId);

|

||||

String bookDetailHtml = getByHttpClientWithChrome(bookDetailUrl);

|

||||

String bookDetailHtml = crawlHttpClient.get(bookDetailUrl);

|

||||

if (bookDetailHtml != null) {

|

||||

Pattern bookNamePatten = PatternFactory.getPattern(ruleBean.getBookNamePatten());

|

||||

Matcher bookNameMatch = bookNamePatten.matcher(bookDetailHtml);

|

||||

@ -89,14 +88,15 @@ public class CrawlParser {

|

||||

}

|

||||

}

|

||||

|

||||

String desc = bookDetailHtml.substring(bookDetailHtml.indexOf(ruleBean.getDescStart()) + ruleBean.getDescStart().length());

|

||||

String desc = bookDetailHtml.substring(

|

||||

bookDetailHtml.indexOf(ruleBean.getDescStart()) + ruleBean.getDescStart().length());

|

||||

desc = desc.substring(0, desc.indexOf(ruleBean.getDescEnd()));

|

||||

// Strip special tags from the description

|

||||

desc = desc.replaceAll("<a[^<]+</a>", "")

|

||||

.replaceAll("<font[^<]+</font>", "")

|

||||

.replaceAll("<p>\\s*</p>", "")

|

||||

.replaceAll("<p>", "")

|

||||

.replaceAll("</p>", "<br/>");

|

||||

.replaceAll("<font[^<]+</font>", "")

|

||||

.replaceAll("<p>\\s*</p>", "")

|

||||

.replaceAll("<p>", "")

|

||||

.replaceAll("</p>", "<br/>");

|

||||

// Set the book description

|

||||

book.setBookDesc(desc);

|

||||

if (StringUtils.isNotBlank(ruleBean.getStatusPatten())) {

|

||||
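The description step above cuts the text between `descStart` and `descEnd` and then strips a few tags. The following is a minimal sketch of that step; `DescSketch`, `between`, and `cleanDesc` are hypothetical names. Unlike the code in the hunk, this sketch returns `null` when a marker is missing, which is an assumption about the desired behavior and not something the diff shows.

```java
final class DescSketch {
    /** Returns the text between the first start marker and the next end marker, or null if either is missing. */
    static String between(String html, String start, String end) {
        int from = html.indexOf(start);
        if (from < 0) {
            return null;
        }
        from += start.length();
        int to = html.indexOf(end, from);
        return to < 0 ? null : html.substring(from, to);
    }

    /** Applies the same replaceAll chain as the hunk above. */
    static String cleanDesc(String raw) {
        return raw.replaceAll("<a[^<]+</a>", "")
                .replaceAll("<font[^<]+</font>", "")
                .replaceAll("<p>\\s*</p>", "")
                .replaceAll("<p>", "")
                .replaceAll("</p>", "<br/>");
    }

    public static void main(String[] args) {
        // Made-up sample markup using the descStart/descEnd values from the crawl rule.
        String html = "<div id=\"intro\"><p>Hello <a href=\"/x\">ad</a>world</p><p> </p></div>";
        System.out.println(cleanDesc(between(html, "<div id=\"intro\">", "</div>")));
    }
}
```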

@ -112,14 +112,16 @@ public class CrawlParser {

|

||||

}

|

||||

}

|

||||

|

||||

if (StringUtils.isNotBlank(ruleBean.getUpadateTimePatten()) && StringUtils.isNotBlank(ruleBean.getUpadateTimeFormatPatten())) {

|

||||

if (StringUtils.isNotBlank(ruleBean.getUpadateTimePatten()) && StringUtils.isNotBlank(

|

||||

ruleBean.getUpadateTimeFormatPatten())) {

|

||||

Pattern updateTimePatten = PatternFactory.getPattern(ruleBean.getUpadateTimePatten());

|

||||

Matcher updateTimeMatch = updateTimePatten.matcher(bookDetailHtml);

|

||||

boolean isFindUpdateTime = updateTimeMatch.find();

|

||||

if (isFindUpdateTime) {

|

||||

String updateTime = updateTimeMatch.group(1);

|

||||

// Set the update time

|

||||

book.setLastIndexUpdateTime(new SimpleDateFormat(ruleBean.getUpadateTimeFormatPatten()).parse(updateTime));

|

||||

book.setLastIndexUpdateTime(

|

||||

new SimpleDateFormat(ruleBean.getUpadateTimeFormatPatten()).parse(updateTime));

|

||||

|

||||

}

|

||||

}

|

||||

@ -141,7 +143,8 @@ public class CrawlParser {

|

||||

handler.handle(book);

|

||||

}

|

||||

|

||||

public static boolean parseBookIndexAndContent(String sourceBookId, Book book, RuleBean ruleBean, Map<Integer, BookIndex> existBookIndexMap, CrawlBookChapterHandler handler) {

|

||||

public boolean parseBookIndexAndContent(String sourceBookId, Book book, RuleBean ruleBean,

|

||||

Map<Integer, BookIndex> existBookIndexMap, CrawlBookChapterHandler handler) {

|

||||

|

||||

Date currentDate = new Date();

|

||||

|

||||

@ -149,11 +152,12 @@ public class CrawlParser {

|

||||

List<BookContent> contentList = new ArrayList<>();

|

||||

// Fetch the chapter index

|

||||

String indexListUrl = ruleBean.getBookIndexUrl().replace("{bookId}", sourceBookId);

|

||||

String indexListHtml = getByHttpClientWithChrome(indexListUrl);

|

||||

String indexListHtml = crawlHttpClient.get(indexListUrl);

|

||||

|

||||

if (indexListHtml != null) {

|

||||

if (StringUtils.isNotBlank(ruleBean.getBookIndexStart())) {

|

||||

indexListHtml = indexListHtml.substring(indexListHtml.indexOf(ruleBean.getBookIndexStart()) + ruleBean.getBookIndexStart().length());

|

||||

indexListHtml = indexListHtml.substring(

|

||||

indexListHtml.indexOf(ruleBean.getBookIndexStart()) + ruleBean.getBookIndexStart().length());

|

||||

}

|

||||

|

||||

Pattern indexIdPatten = PatternFactory.getPattern(ruleBean.getIndexIdPatten());

|

||||

@ -174,14 +178,16 @@ public class CrawlParser {

|

||||

BookIndex hasIndex = existBookIndexMap.get(indexNum);

|

||||

String indexName = indexNameMatch.group(1);

|

||||

|

||||

if (hasIndex == null || !StringUtils.deleteWhitespace(hasIndex.getIndexName()).equals(StringUtils.deleteWhitespace(indexName))) {

|

||||

if (hasIndex == null || !StringUtils.deleteWhitespace(hasIndex.getIndexName())

|

||||

.equals(StringUtils.deleteWhitespace(indexName))) {

|

||||

|

||||

String sourceIndexId = indexIdMatch.group(1);

|

||||

String bookContentUrl = ruleBean.getBookContentUrl();

|

||||

int calStart = bookContentUrl.indexOf("{cal_");

|

||||

if (calStart != -1) {

|

||||

// The content page URL has to be computed

|

||||

String calStr = bookContentUrl.substring(calStart, calStart + bookContentUrl.substring(calStart).indexOf("}"));

|

||||

String calStr = bookContentUrl.substring(calStart,

|

||||

calStart + bookContentUrl.substring(calStart).indexOf("}"));

|

||||

String[] calArr = calStr.split("_");

|

||||

int calType = Integer.parseInt(calArr[1]);

|

||||

if (calType == 1) {

|

||||

@ -206,13 +212,25 @@ public class CrawlParser {

|

||||

|

||||

}

|

||||

|

||||

String contentUrl = bookContentUrl.replace("{bookId}", sourceBookId).replace("{indexId}", sourceIndexId);

|

||||

String contentUrl = bookContentUrl.replace("{bookId}", sourceBookId)

|

||||

.replace("{indexId}", sourceIndexId);

|

||||

|

||||

// Fetch the chapter content

|

||||

String contentHtml = getByHttpClientWithChrome(contentUrl);

|

||||

String contentHtml = crawlHttpClient.get(contentUrl);

|

||||

if (contentHtml != null && !contentHtml.contains("正在手打中")) {

|

||||

String content = contentHtml.substring(contentHtml.indexOf(ruleBean.getContentStart()) + ruleBean.getContentStart().length());

|

||||

String content = contentHtml.substring(

|

||||

contentHtml.indexOf(ruleBean.getContentStart()) + ruleBean.getContentStart().length());

|

||||

content = content.substring(0, content.indexOf(ruleBean.getContentEnd()));

|

||||

// Filter the novel content

|

||||

String filterContent = ruleBean.getFilterContent();

|

||||

if (StringUtils.isNotBlank(filterContent)) {

|

||||

String[] filterRules = filterContent.replace("\r\n", "\n").split("\n");

|

||||

for (String filterRule : filterRules) {

|

||||

if (StringUtils.isNotBlank(filterRule)) {

|

||||

content = content.replaceAll(filterRule, "");

|

||||

}

|

||||

}

|

||||

}

|

||||

// Insert the chapter index entry and the chapter content

|

||||

BookIndex bookIndex = new BookIndex();

|

||||

bookIndex.setIndexName(indexName);

|

||||
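The new content filter treats `filterContent` as a list of regular expressions, one per line, and removes every match from the chapter text. The following is a minimal sketch of that loop; `FilterSketch` and `applyFilters` are hypothetical names, and `trim().isEmpty()` stands in for `StringUtils.isNotBlank` so the block needs only the JDK.

```java
final class FilterSketch {
    /** Removes every match of each non-blank rule (one regex per line) from the content. */
    static String applyFilters(String content, String filterContent) {
        if (filterContent == null || filterContent.trim().isEmpty()) {
            return content;
        }
        // Normalize Windows line endings first, as the hunk above does.
        for (String rule : filterContent.replace("\r\n", "\n").split("\n")) {
            if (!rule.trim().isEmpty()) {
                content = content.replaceAll(rule, "");
            }
        }
        return content;
    }

    public static void main(String[] args) {
        // First rule is the assumed decoded "filterContent" of the crawl rule; the second is made up.
        String rules = "<div\\s+id=\"content_tip\">\\s*<b>([^/]+)</b>\\s*</div>\r\nAD";
        System.out.println(applyFilters("text<div id=\"content_tip\"><b>tip</b></div>more AD", rules));
    }
}
```

Because each line is passed to `replaceAll` as a regex, a rule meant as literal text would need its metacharacters escaped.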

@ -235,7 +253,7 @@ public class CrawlParser {

|

||||

} else {

|

||||

// Insert the chapter

|

||||

// Set the index entry and the chapter content

|

||||

Long indexId = idWorker.nextId();

|

||||

Long indexId = ID_WORKER.nextId();

|

||||

bookIndex.setId(indexId);

|

||||

bookIndex.setBookId(book.getId());

|

||||

|

||||

@ -257,7 +275,6 @@ public class CrawlParser {

|

||||

isFindIndex = indexIdMatch.find() & indexNameMatch.find();

|

||||

}

|

||||

|

||||

|

||||

if (indexList.size() > 0) {

|

||||

// If new chapters were crawled, update the latest-chapter info on the main book table

|

||||

// Get the latest crawled chapter

|

||||

@ -290,56 +307,4 @@ public class CrawlParser {

|

||||

return false;

|

||||

|

||||

}

|

||||

|

||||

|

||||

private static String getByHttpClient(String url) {

|

||||

try {

|

||||

ResponseEntity<String> forEntity = restTemplate.getForEntity(url, String.class);

|

||||

if (forEntity.getStatusCode() == HttpStatus.OK) {

|

||||

String body = forEntity.getBody();

|

||||

assert body != null;

|

||||

if (body.length() < Constants.INVALID_HTML_LENGTH) {

|

||||

return processErrorHttpResult(url);

|

||||

}

|

||||

// HTML content fetched successfully

|

||||

return body;

|

||||

}

|

||||

} catch (Exception e) {

|

||||

e.printStackTrace();

|

||||

}

|

||||

return processErrorHttpResult(url);

|

||||

|

||||

}

|

||||

|

||||

private static String getByHttpClientWithChrome(String url) {

|

||||

try {

|

||||

|

||||

String body = HttpUtil.getByHttpClientWithChrome(url);

|

||||

if (body != null && body.length() < Constants.INVALID_HTML_LENGTH) {

|

||||

return processErrorHttpResult(url);

|

||||

}

|

||||

// HTML content fetched successfully

|

||||

return body;

|

||||

} catch (Exception e) {

|

||||

e.printStackTrace();

|

||||

}

|

||||

return processErrorHttpResult(url);

|

||||

|

||||

}

|

||||

|

||||

@SneakyThrows

|

||||

private static String processErrorHttpResult(String url) {

|

||||

Integer count = retryCount.get();

|

||||

if (count == null) {

|

||||

count = 0;

|

||||

}

|

||||

if (count < Constants.HTTP_FAIL_RETRY_COUNT) {

|

||||

Thread.sleep(new Random().nextInt(10 * 1000));

|

||||

retryCount.set(++count);

|

||||

return getByHttpClient(url);

|

||||

}

|

||||

return null;

|

||||

}

|

||||

|

||||

|

||||

}

|

||||

|

||||
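The helpers removed above retried a failed or too-short response through a `ThreadLocal` counter that is not reset anywhere in the lines shown, and the retry of the Chrome variant went through `getByHttpClient`, which sets no user-agent header. Their replacement, `CrawlHttpClient`, is not part of this diff, so the following is only a sketch of one way to bound retries with a local counter; `RetrySketch`, `fetchWithRetry`, and all parameters are hypothetical.

```java
import java.util.concurrent.ThreadLocalRandom;
import java.util.function.Supplier;

final class RetrySketch {
    /**
     * Calls fetch up to maxRetries + 1 times and returns the first body whose length
     * is at least minLength, or null if every attempt fails.
     */
    static String fetchWithRetry(Supplier<String> fetch, int maxRetries, int minLength, long maxSleepMillis) {
        for (int attempt = 0; attempt <= maxRetries; attempt++) {
            if (attempt > 0 && maxSleepMillis > 0) {
                try {
                    // Random pause before each retry, similar in spirit to the removed code.
                    Thread.sleep(ThreadLocalRandom.current().nextLong(maxSleepMillis));
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return null;
                }
            }
            String body;
            try {
                body = fetch.get();
            } catch (RuntimeException e) {
                body = null; // treat an exception like an invalid response
            }
            if (body != null && body.length() >= minLength) {
                return body;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        int[] calls = {0};
        String body = fetchWithRetry(() -> ++calls[0] < 3 ? "x" : "long enough body", 3, 5, 0);
        System.out.println(body + " after " + calls[0] + " calls");
    }
}
```

Keeping the attempt counter local to the call means one request's retries cannot use up the budget of a later request on the same thread.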

@ -6,6 +6,7 @@ import java.util.Map;

|

||||

|

||||

/**

|

||||

* Crawler parsing rule bean

|

||||

*

|

||||

* @author Administrator

|

||||

*/

|

||||

@Data

|

||||

@ -13,17 +14,17 @@ public class RuleBean {

|

||||

|

||||

/**

|

||||

* URL of the novel update list

|

||||

* */

|

||||

*/

|

||||

private String updateBookListUrl;

|

||||

|

||||

/**

|

||||

* URL rule for the category list page

|

||||

* */

|

||||

*/

|

||||

private String bookListUrl;

|

||||

|

||||

private Map<String,String> catIdRule;

|

||||

private Map<String, String> catIdRule;

|

||||

|

||||

private Map<String,Byte> bookStatusRule;

|

||||

private Map<String, Byte> bookStatusRule;

|

||||

|

||||

private String bookIdPatten;

|

||||

private String pagePatten;

|

||||

@ -51,5 +52,7 @@ public class RuleBean {

|

||||

|

||||

private String bookIndexStart;

|

||||

|

||||

private String filterContent;

|

||||

|

||||

|

||||

}

|

||||

|

||||

@ -1,10 +1,12 @@

|

||||

package com.java2nb.novel.core.listener;

|

||||

|

||||

import com.fasterxml.jackson.databind.ObjectMapper;

|

||||

import com.java2nb.novel.core.crawl.ChapterBean;

|

||||

import com.java2nb.novel.core.crawl.CrawlParser;

|

||||

import com.java2nb.novel.core.crawl.RuleBean;

|

||||

import com.java2nb.novel.entity.*;

|

||||

import com.java2nb.novel.entity.Book;

|

||||

import com.java2nb.novel.entity.BookIndex;

|

||||

import com.java2nb.novel.entity.CrawlSingleTask;

|

||||

import com.java2nb.novel.entity.CrawlSource;

|

||||

import com.java2nb.novel.service.BookService;

|

||||

import com.java2nb.novel.service.CrawlService;

|

||||

import com.java2nb.novel.utils.Constants;

|

||||

@ -33,6 +35,8 @@ public class StarterListener implements ServletContextListener {

|

||||

|

||||

private final CrawlService crawlService;

|

||||

|

||||

private final CrawlParser crawlParser;

|

||||

|

||||

@Value("${crawl.update.thread}")

|

||||

private int updateThreadCount;

|

||||

|

||||
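This commit turns `CrawlParser` from a class of static methods into a component that receives its HTTP client through the constructor, and `StarterListener` now holds a `crawlParser` field. One practical effect is that the parser can be exercised with a stub client. The following is a minimal sketch in plain Java without Spring; `PageFetcher`, `BookTitleParser`, and `parseTitle` are hypothetical stand-ins, and the title pattern is the `bookNamePatten` value from the crawl rule.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical stand-in for the injected HTTP client. */
interface PageFetcher {
    String get(String url);
}

final class BookTitleParser {
    private static final Pattern TITLE = Pattern.compile("<h1>([^/]+)</h1>");

    private final PageFetcher fetcher;

    // Constructor injection: the dependency is supplied by the caller, not looked up statically.
    BookTitleParser(PageFetcher fetcher) {
        this.fetcher = fetcher;
    }

    /** Fetches the detail page for bookId and returns the title, or null if it cannot be found. */
    String parseTitle(String detailUrlTemplate, String bookId) {
        String html = fetcher.get(detailUrlTemplate.replace("{bookId}", bookId));
        if (html == null) {
            return null;
        }
        Matcher m = TITLE.matcher(html);
        return m.find() ? m.group(1) : null;
    }

    public static void main(String[] args) {
        // A lambda stub replaces the real client, so no network access is needed.
        BookTitleParser parser = new BookTitleParser(url -> "<html><h1>Sample</h1></html>");
        System.out.println(parser.parseTitle("http://example.com/{bookId}/", "1/2"));
    }
}
```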

@ -56,20 +60,24 @@ public class StarterListener implements ServletContextListener {

|

||||

CrawlSource source = crawlService.queryCrawlSource(needUpdateBook.getCrawlSourceId());

|

||||

RuleBean ruleBean = new ObjectMapper().readValue(source.getCrawlRule(), RuleBean.class);

|

||||

// Parse the basic book information

|

||||

CrawlParser.parseBook(ruleBean, needUpdateBook.getCrawlBookId(),book -> {

|

||||

crawlParser.parseBook(ruleBean, needUpdateBook.getCrawlBookId(), book -> {

|

||||

// Only existing books are updated here

|

||||

book.setId(needUpdateBook.getId());

|

||||

book.setWordCount(needUpdateBook.getWordCount());

|

||||

if (needUpdateBook.getPicUrl() != null && needUpdateBook.getPicUrl().contains(Constants.LOCAL_PIC_PREFIX)) {

|

||||

if (needUpdateBook.getPicUrl() != null && needUpdateBook.getPicUrl()

|

||||

.contains(Constants.LOCAL_PIC_PREFIX)) {

|

||||

// Do not update the cover if it is stored locally

|

||||

book.setPicUrl(null);

|

||||

}

|

||||

// Query the chapters that already exist

|

||||

Map<Integer, BookIndex> existBookIndexMap = bookService.queryExistBookIndexMap(needUpdateBook.getId());

|

||||

Map<Integer, BookIndex> existBookIndexMap = bookService.queryExistBookIndexMap(

|

||||

needUpdateBook.getId());

|

||||

// Parse the chapter index

|

||||

CrawlParser.parseBookIndexAndContent(needUpdateBook.getCrawlBookId(), book, ruleBean, existBookIndexMap,chapter -> {

|

||||

bookService.updateBookAndIndexAndContent(book, chapter.getBookIndexList(), chapter.getBookContentList(), existBookIndexMap);

|

||||

});

|

||||

crawlParser.parseBookIndexAndContent(needUpdateBook.getCrawlBookId(), book,

|

||||

ruleBean, existBookIndexMap, chapter -> {

|

||||

bookService.updateBookAndIndexAndContent(book, chapter.getBookIndexList(),

|

||||

chapter.getBookContentList(), existBookIndexMap);

|

||||

});

|

||||

});

|

||||

} catch (Exception e) {

|

||||

log.error(e.getMessage(), e);

|

||||

@ -88,7 +96,6 @@ public class StarterListener implements ServletContextListener {

|

||||

|

||||

}

|

||||

|

||||

|

||||

new Thread(() -> {

|

||||

log.info("程序启动,开始执行单本采集任务线程。。。");

|

||||

while (true) {

|

||||

@ -103,7 +110,8 @@ public class StarterListener implements ServletContextListener {

|

||||

CrawlSource source = crawlService.queryCrawlSource(task.getSourceId());

|

||||

RuleBean ruleBean = new ObjectMapper().readValue(source.getCrawlRule(), RuleBean.class);

|

||||

|

||||

if (crawlService.parseBookAndSave(task.getCatId(), ruleBean, task.getSourceId(), task.getSourceBookId())) {

|

||||

if (crawlService.parseBookAndSave(task.getCatId(), ruleBean, task.getSourceId(),

|

||||

task.getSourceBookId())) {

|

||||

// Crawl succeeded

|

||||

crawlStatus = 1;

|

||||

}

|

||||

|

||||

@ -2,17 +2,11 @@ package com.java2nb.novel.service.impl;

|

||||

|

||||

import com.fasterxml.jackson.databind.ObjectMapper;

|

||||

import com.github.pagehelper.PageHelper;

|

||||

import io.github.xxyopen.model.page.PageBean;

|

||||

import com.java2nb.novel.core.cache.CacheKey;

|

||||

import com.java2nb.novel.core.cache.CacheService;

|

||||

import com.java2nb.novel.core.crawl.CrawlParser;

|

||||

import com.java2nb.novel.core.crawl.RuleBean;

|

||||

import com.java2nb.novel.core.enums.ResponseStatus;

|

||||

import io.github.xxyopen.model.page.builder.pagehelper.PageBuilder;

|

||||

import io.github.xxyopen.util.IdWorker;

|

||||

import io.github.xxyopen.util.ThreadUtil;

|

||||

import io.github.xxyopen.web.exception.BusinessException;

|

||||

import io.github.xxyopen.web.util.BeanUtil;

|

||||

import com.java2nb.novel.entity.Book;

|

||||

import com.java2nb.novel.entity.CrawlSingleTask;

|

||||

import com.java2nb.novel.entity.CrawlSource;

|

||||

@ -22,8 +16,15 @@ import com.java2nb.novel.mapper.CrawlSourceDynamicSqlSupport;

|

||||

import com.java2nb.novel.mapper.CrawlSourceMapper;

|

||||

import com.java2nb.novel.service.BookService;

|

||||

import com.java2nb.novel.service.CrawlService;

|

||||

import com.java2nb.novel.utils.CrawlHttpClient;

|

||||

import com.java2nb.novel.vo.CrawlSingleTaskVO;

|

||||

import com.java2nb.novel.vo.CrawlSourceVO;

|

||||

import io.github.xxyopen.model.page.PageBean;

|

||||

import io.github.xxyopen.model.page.builder.pagehelper.PageBuilder;

|

||||

import io.github.xxyopen.util.IdWorker;

|

||||

import io.github.xxyopen.util.ThreadUtil;

|

||||

import io.github.xxyopen.web.exception.BusinessException;

|

||||

import io.github.xxyopen.web.util.BeanUtil;

|

||||

import io.github.xxyopen.web.util.SpringUtil;

|

||||

import lombok.RequiredArgsConstructor;

|

||||

import lombok.SneakyThrows;

|

||||

@ -38,7 +39,6 @@ import java.util.concurrent.atomic.AtomicBoolean;

|

||||

import java.util.regex.Matcher;

|

||||

import java.util.regex.Pattern;

|

||||

|

||||

import static com.java2nb.novel.core.utils.HttpUtil.getByHttpClientWithChrome;

|

||||

import static com.java2nb.novel.mapper.CrawlSourceDynamicSqlSupport.*;

|

||||

import static org.mybatis.dynamic.sql.SqlBuilder.isEqualTo;

|

||||

import static org.mybatis.dynamic.sql.select.SelectDSL.select;

|

||||

@ -51,6 +51,7 @@ import static org.mybatis.dynamic.sql.select.SelectDSL.select;

|

||||

@Slf4j

|

||||

public class CrawlServiceImpl implements CrawlService {

|

||||

|

||||

private final CrawlParser crawlParser;

|

||||

|

||||

private final CrawlSourceMapper crawlSourceMapper;

|

||||

|

||||

@ -62,6 +63,8 @@ public class CrawlServiceImpl implements CrawlService {

|

||||

|

||||

private final IdWorker idWorker = IdWorker.INSTANCE;

|

||||

|

||||

private final CrawlHttpClient crawlHttpClient;

|

||||

|

||||

|

||||

@Override

|

||||

public void addCrawlSource(CrawlSource source) {

|

||||

@ -71,15 +74,16 @@ public class CrawlServiceImpl implements CrawlService {

|

||||

crawlSourceMapper.insertSelective(source);

|

||||

|

||||

}

|

||||

|

||||

@Override

|

||||

public void updateCrawlSource(CrawlSource source) {

|

||||

if(source.getId()!=null){

|

||||

Optional<CrawlSource> opt=crawlSourceMapper.selectByPrimaryKey(source.getId());

|

||||

if(opt.isPresent()) {

|

||||

CrawlSource crawlSource =opt.get();

|

||||

if (source.getId() != null) {

|

||||

Optional<CrawlSource> opt = crawlSourceMapper.selectByPrimaryKey(source.getId());

|

||||

if (opt.isPresent()) {

|

||||

CrawlSource crawlSource = opt.get();

|

||||

if (crawlSource.getSourceStatus() == (byte) 1) {

|

||||

// Stop the crawler

|

||||

openOrCloseCrawl(crawlSource.getId(),(byte)0);

|

||||

openOrCloseCrawl(crawlSource.getId(), (byte) 0);

|

||||

}

|

||||

Date currentDate = new Date();

|

||||

crawlSource.setUpdateTime(currentDate);

|

||||

@ -89,14 +93,15 @@ public class CrawlServiceImpl implements CrawlService {

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

@Override

|

||||

public PageBean<CrawlSource> listCrawlByPage(int page, int pageSize) {

|

||||

PageHelper.startPage(page, pageSize);

|

||||

SelectStatementProvider render = select(id, sourceName, sourceStatus, createTime, updateTime)

|

||||

.from(crawlSource)

|

||||

.orderBy(updateTime)

|

||||

.build()

|

||||

.render(RenderingStrategies.MYBATIS3);

|

||||

.from(crawlSource)

|

||||

.orderBy(updateTime)

|

||||

.build()

|

||||

.render(RenderingStrategies.MYBATIS3);

|

||||

List<CrawlSource> crawlSources = crawlSourceMapper.selectMany(render);

|

||||

PageBean<CrawlSource> pageBean = PageBuilder.build(crawlSources);

|

||||

pageBean.setList(BeanUtil.copyList(crawlSources, CrawlSourceVO.class));

|

||||

@ -113,7 +118,8 @@ public class CrawlServiceImpl implements CrawlService {

|

||||

if (sourceStatus == (byte) 0) {

|

||||

// Stop: update the status in the database, then fetch the set of threads running this crawler and stop them all

|

||||

SpringUtil.getBean(CrawlService.class).updateCrawlSourceStatus(sourceId, sourceStatus);

|

||||

Set<Long> runningCrawlThreadId = (Set<Long>) cacheService.getObject(CacheKey.RUNNING_CRAWL_THREAD_KEY_PREFIX + sourceId);

|

||||

Set<Long> runningCrawlThreadId = (Set<Long>) cacheService.getObject(

|

||||

CacheKey.RUNNING_CRAWL_THREAD_KEY_PREFIX + sourceId);

|

||||

if (runningCrawlThreadId != null) {

|

||||

for (Long ThreadId : runningCrawlThreadId) {

|

||||

Thread thread = ThreadUtil.findThread(ThreadId);

|

||||

@ -157,11 +163,12 @@ public class CrawlServiceImpl implements CrawlService {

|

||||

|

||||

@Override

|

||||

public CrawlSource queryCrawlSource(Integer sourceId) {

|

||||

SelectStatementProvider render = select(CrawlSourceDynamicSqlSupport.sourceStatus, CrawlSourceDynamicSqlSupport.crawlRule)

|

||||

.from(crawlSource)

|

||||

.where(id, isEqualTo(sourceId))

|

||||

.build()

|

||||

.render(RenderingStrategies.MYBATIS3);

|

||||

SelectStatementProvider render = select(CrawlSourceDynamicSqlSupport.sourceStatus,

|

||||

CrawlSourceDynamicSqlSupport.crawlRule)

|

||||

.from(crawlSource)

|

||||

.where(id, isEqualTo(sourceId))

|

||||

.build()

|

||||

.render(RenderingStrategies.MYBATIS3);

|

||||

return crawlSourceMapper.selectMany(render).get(0);

|

||||

}

|

||||

|

||||

@ -182,10 +189,10 @@ public class CrawlServiceImpl implements CrawlService {

|

||||

public PageBean<CrawlSingleTask> listCrawlSingleTaskByPage(int page, int pageSize) {

|

||||

PageHelper.startPage(page, pageSize);

|

||||

SelectStatementProvider render = select(CrawlSingleTaskDynamicSqlSupport.crawlSingleTask.allColumns())

|

||||

.from(CrawlSingleTaskDynamicSqlSupport.crawlSingleTask)

|

||||

.orderBy(CrawlSingleTaskDynamicSqlSupport.createTime.descending())

|

||||

.build()

|

||||

.render(RenderingStrategies.MYBATIS3);

|

||||

.from(CrawlSingleTaskDynamicSqlSupport.crawlSingleTask)

|

||||

.orderBy(CrawlSingleTaskDynamicSqlSupport.createTime.descending())

|

||||

.build()

|

||||

.render(RenderingStrategies.MYBATIS3);

|

||||

List<CrawlSingleTask> crawlSingleTasks = crawlSingleTaskMapper.selectMany(render);

|

||||

PageBean<CrawlSingleTask> pageBean = PageBuilder.build(crawlSingleTasks);

|

||||

pageBean.setList(BeanUtil.copyList(crawlSingleTasks, CrawlSingleTaskVO.class));

|

||||

@ -200,7 +207,8 @@ public class CrawlServiceImpl implements CrawlService {

|

||||

@Override

|

||||

public CrawlSingleTask getCrawlSingleTask() {

|

||||

|

||||

List<CrawlSingleTask> list = crawlSingleTaskMapper.selectMany(select(CrawlSingleTaskDynamicSqlSupport.crawlSingleTask.allColumns())

|

||||

List<CrawlSingleTask> list = crawlSingleTaskMapper.selectMany(

|

||||

select(CrawlSingleTaskDynamicSqlSupport.crawlSingleTask.allColumns())

|

||||

.from(CrawlSingleTaskDynamicSqlSupport.crawlSingleTask)

|

||||

.where(CrawlSingleTaskDynamicSqlSupport.taskStatus, isEqualTo((byte) 2))

|

||||

.orderBy(CrawlSingleTaskDynamicSqlSupport.createTime)

|

||||

@ -226,12 +234,12 @@ public class CrawlServiceImpl implements CrawlService {

|

||||

|

||||

@Override

|

||||

public CrawlSource getCrawlSource(Integer id) {

|

||||

Optional<CrawlSource> opt=crawlSourceMapper.selectByPrimaryKey(id);

|

||||

if(opt.isPresent()) {

|

||||

CrawlSource crawlSource =opt.get();

|

||||

return crawlSource;

|

||||

}

|

||||

return null;

|

||||

Optional<CrawlSource> opt = crawlSourceMapper.selectByPrimaryKey(id);

|

||||

if (opt.isPresent()) {

|

||||

CrawlSource crawlSource = opt.get();

|

||||

return crawlSource;

|

||||

}

|

||||

return null;

|

||||

}
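The `isPresent()` / `get()` / `return null` pattern in `getCrawlSource` can be written more compactly. The following is only a sketch of the equivalent idiom; the class and method names (`OptionalSketch`, `unwrapOrNull`) are hypothetical and not part of this commit.

```java
import java.util.Optional;

class OptionalSketch {

    /**
     * Returns the wrapped value, or null when the Optional is empty.
     * Behaves like: if (opt.isPresent()) { return opt.get(); } return null;
     */
    static <T> T unwrapOrNull(Optional<T> opt) {
        return opt.orElse(null);
    }

    public static void main(String[] args) {
        System.out.println(unwrapOrNull(Optional.of("crawl-source")));
        System.out.println(unwrapOrNull(Optional.empty()));
    }
}
```

Callers still need a null check either way; returning the `Optional` itself would make that requirement visible in the signature.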

|

||||

|

||||

/**

|

||||

@ -251,10 +259,10 @@ public class CrawlServiceImpl implements CrawlService {

|

||||

if (StringUtils.isNotBlank(ruleBean.getCatIdRule().get("catId" + catId))) {

|

||||

// Build the category URL

|

||||

String catBookListUrl = ruleBean.getBookListUrl()

|

||||

.replace("{catId}", ruleBean.getCatIdRule().get("catId" + catId))

|

||||

.replace("{page}", page + "");

|

||||

.replace("{catId}", ruleBean.getCatIdRule().get("catId" + catId))

|

||||

.replace("{page}", page + "");

|

||||

|

||||

String bookListHtml = getByHttpClientWithChrome(catBookListUrl);

|

||||

String bookListHtml = crawlHttpClient.get(catBookListUrl);

|

||||

if (bookListHtml != null) {

|

||||

Pattern bookIdPatten = Pattern.compile(ruleBean.getBookIdPatten());

|

||||

Matcher bookIdMatcher = bookIdPatten.matcher(bookListHtml);

|

||||

@ -268,14 +276,12 @@ public class CrawlServiceImpl implements CrawlService {

|

||||

return;

|

||||

}

|

||||

|

||||

|

||||

String bookId = bookIdMatcher.group(1);

|

||||

parseBookAndSave(catId, ruleBean, sourceId, bookId);

|

||||

} catch (Exception e) {

|

||||

log.error(e.getMessage(), e);

|

||||

}

|

||||

|

||||

|

||||

isFindBookId = bookIdMatcher.find();

|

||||

}

|

||||

|

||||

@ -306,7 +312,7 @@ public class CrawlServiceImpl implements CrawlService {

|

||||

|

||||

final AtomicBoolean parseResult = new AtomicBoolean(false);

|

||||

|

||||

CrawlParser.parseBook(ruleBean, bookId, book -> {

|

||||

crawlParser.parseBook(ruleBean, bookId, book -> {

|

||||

if (book.getBookName() == null || book.getAuthorName() == null) {

|

||||

return;

|

||||

}

|

||||

@ -330,9 +336,11 @@ public class CrawlServiceImpl implements CrawlService {

|

||||

book.setCrawlLastTime(new Date());

|

||||

book.setId(idWorker.nextId());

|

||||

// Parse the chapter index

|

||||

boolean parseIndexContentResult = CrawlParser.parseBookIndexAndContent(bookId, book, ruleBean, new HashMap<>(0), chapter -> {

|

||||

bookService.saveBookAndIndexAndContent(book, chapter.getBookIndexList(), chapter.getBookContentList());

|

||||

});

|

||||

boolean parseIndexContentResult = crawlParser.parseBookIndexAndContent(bookId, book, ruleBean,

|

||||

new HashMap<>(0), chapter -> {

|

||||

bookService.saveBookAndIndexAndContent(book, chapter.getBookIndexList(),

|

||||

chapter.getBookContentList());

|

||||

});

|

||||

parseResult.set(parseIndexContentResult);

|

||||

|

||||

} else {

|

||||

@ -356,11 +364,12 @@ public class CrawlServiceImpl implements CrawlService {

|

||||

|

||||

@Override

|

||||

public List<CrawlSource> queryCrawlSourceByStatus(Byte sourceStatus) {

|

||||

SelectStatementProvider render = select(CrawlSourceDynamicSqlSupport.id, CrawlSourceDynamicSqlSupport.sourceStatus, CrawlSourceDynamicSqlSupport.crawlRule)

|

||||

.from(crawlSource)

|

||||

.where(CrawlSourceDynamicSqlSupport.sourceStatus, isEqualTo(sourceStatus))

|

||||

.build()

|

||||

.render(RenderingStrategies.MYBATIS3);

|

||||

SelectStatementProvider render = select(CrawlSourceDynamicSqlSupport.id,

|

||||

CrawlSourceDynamicSqlSupport.sourceStatus, CrawlSourceDynamicSqlSupport.crawlRule)

|

||||

.from(crawlSource)

|

||||

.where(CrawlSourceDynamicSqlSupport.sourceStatus, isEqualTo(sourceStatus))

|

||||

.build()

|

||||

.render(RenderingStrategies.MYBATIS3);

|

||||

return crawlSourceMapper.selectMany(render);

|

||||

}

|

||||

}

|

||||

|

||||

@ -7,7 +7,7 @@ public class Constants {

|

||||

|

||||

/**

|

||||

* Prefix for locally saved images

|

||||

* */

|

||||

*/

|

||||

public static final String LOCAL_PIC_PREFIX = "/localPic/";

|

||||

|

||||

/**

|

||||

@ -23,5 +23,5 @@ public class Constants {

|

||||

/**

|

||||

* Number of retries when an HTTP request fails while crawling novels

|

||||

*/

|

||||

public static final Integer HTTP_FAIL_RETRY_COUNT = 5;

|

||||

public static final Integer HTTP_FAIL_RETRY_COUNT = 3;

|

||||

}

|

||||

|

||||

@ -0,0 +1,57 @@

|

||||

package com.java2nb.novel.utils;

|

||||

|

||||

import com.java2nb.novel.core.utils.HttpUtil;

|

||||

import lombok.extern.slf4j.Slf4j;

|

||||

import org.springframework.beans.factory.annotation.Value;

|

||||

import org.springframework.stereotype.Component;

|

||||

|

||||

import java.util.Objects;

|

||||

import java.util.Random;

|

||||

|

||||

/**

|

||||

* @author Administrator

|

||||

*/

|

||||

@Slf4j

|

||||

@Component

|

||||

public class CrawlHttpClient {

|

||||

|

||||

@Value("${crawl.interval.min}")

|

||||

private Integer intervalMin;

|

||||

|

||||

@Value("${crawl.interval.max}")

|

||||

private Integer intervalMax;

|

||||

|

||||

private final Random random = new Random();

|

||||

|

||||

private static final ThreadLocal<Integer> RETRY_COUNT = new ThreadLocal<>();

|

||||

|

||||

public String get(String url) {

|

||||

if (Objects.nonNull(intervalMin) && Objects.nonNull(intervalMax) && intervalMax > intervalMin) {

|

||||

try {

|

||||

Thread.sleep(random.nextInt(intervalMax - intervalMin + 1) + intervalMin);

|

||||

} catch (InterruptedException e) {

|

||||

log.error(e.getMessage(), e);

|

||||

}

|

||||

}

|

||||

String body = HttpUtil.getByHttpClientWithChrome(url);

|

||||

if (Objects.isNull(body) || body.length() < Constants.INVALID_HTML_LENGTH) {

|

||||

return processErrorHttpResult(url);

|

||||

}

|

||||

// Successfully retrieved the HTML content

|

||||

return body;

|

||||

}

|

||||

|

||||

private String processErrorHttpResult(String url) {

|

||||

Integer count = RETRY_COUNT.get();

|

||||

if (count == null) {

|

||||

count = 0;

|

||||

}

|

||||

if (count < Constants.HTTP_FAIL_RETRY_COUNT) {

|

||||

RETRY_COUNT.set(++count);

|

||||

return get(url);

|

||||

}

|

||||

RETRY_COUNT.remove();

|

||||

return null;

|

||||

}

|

||||

|

||||

}
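`CrawlHttpClient.get` sleeps for a random interval, fetches the page, and retries through a `ThreadLocal` counter when the body is missing or too short. As written, the counter is cleared only after the retries are exhausted, so a request that succeeds after one retry appears to leave a non-zero count behind for the next call on the same thread. A minimal iterative sketch of the same policy, assuming a pluggable fetcher instead of `HttpUtil`, might look like this; `RetryingFetchSketch`, `fetchWithRetry`, `randomDelay`, and `MAX_RETRIES` are hypothetical names, not code from this commit.

```java
import java.util.concurrent.ThreadLocalRandom;
import java.util.function.Function;

class RetryingFetchSketch {

    /** Hypothetical stand-in for Constants.HTTP_FAIL_RETRY_COUNT. */
    static final int MAX_RETRIES = 3;

    /** Picks a delay in [min, max] milliseconds; returns 0 unless max > min. */
    static long randomDelay(int min, int max) {
        if (max <= min) {
            return 0L;
        }
        // nextInt(origin, bound) excludes the bound, hence max + 1.
        return ThreadLocalRandom.current().nextInt(min, max + 1);
    }

    static void sleepQuietly(long millis) {
        if (millis <= 0) {
            return;
        }
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            // Restore the interrupt flag so callers can still observe it.
            Thread.currentThread().interrupt();
        }
    }

    /**
     * One initial attempt plus up to MAX_RETRIES retries. The attempt counter
     * is a local variable, so nothing carries over between calls.
     */
    static String fetchWithRetry(String url, Function<String, String> fetcher,
                                 int minLength, int intervalMin, int intervalMax) {
        for (int attempt = 0; attempt <= MAX_RETRIES; attempt++) {
            sleepQuietly(randomDelay(intervalMin, intervalMax));
            String body = fetcher.apply(url);
            if (body != null && body.length() >= minLength) {
                return body;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        // The fetcher here is a stub; a real caller would pass an HTTP call.
        String html = fetchWithRetry("http://example.invalid/list",
                u -> "<html><body>stub page</body></html>", 10, 300, 500);
        System.out.println(html);
    }
}
```

Keeping the counter local avoids per-thread state altogether, which matters when crawler threads are reused across many requests.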

|

||||

@ -14,12 +14,18 @@ admin:

|

||||

username: admin

|

||||

password: admin

|

||||

|

||||

# Number of threads for automatic crawler updates

|

||||

# Setting this to 1 is recommended when there are few novels or while the new-book import crawler is running

|

||||

# It can be raised gradually as the number of novels grows, but should not exceed the number of CPU threads

|

||||

|

||||

|

||||

crawl:

|

||||

update:

|

||||

# Number of threads for automatic crawler updates

|

||||

# Setting this to 1 is recommended when there are few novels or while the new-book import crawler is running

|

||||

# It can be raised gradually as the number of novels grows, but should not exceed the number of CPU threads

|

||||

thread: 1

|

||||

# Crawl interval, in milliseconds

|

||||

interval:

|

||||

min: 300

|

||||

max: 500

|

||||

|

||||

|

||||

|

||||

|

||||

@ -144,6 +144,9 @@

|

||||

Example: <b><script></b>

|

||||

<li><input type="text" id="contentEnd" class="s_input icon_key"

|

||||

placeholder="String marking the end of the novel content:"></li>

|

||||

Example: <b><div\s+id="content_tip">\s*<b>([^/]+)</b>\s*</div></b>

|

||||

<li><textarea id="filterContent"

|

||||

placeholder="Content to filter out (one entry per line)" rows="5" cols="52"></textarea></li>

|

||||

|

||||

<li><input type="button" onclick="addCrawlSource()" name="btnRegister" value="Submit"

|

||||

id="btnRegister" class="btn_red"></li>

|

||||

@ -405,6 +408,9 @@

|

||||

|

||||

crawlRule.contentEnd = contentEnd;

|

||||

|

||||

var filterContent = $("#filterContent").val();

|

||||

crawlRule.filterContent = filterContent;

|

||||

|

||||

|

||||

$.ajax({

|

||||

type: "POST",

|

||||

|

||||

@ -145,6 +145,10 @@

|

||||

Example: <b><script></b>

|

||||

<li><input type="text" id="contentEnd" class="s_input icon_key"

|

||||

placeholder="String marking the end of the novel content:"></li>

|

||||

Example: <b><div\s+id="content_tip">\s*<b>([^/]+)</b>\s*</div></b>

|

||||

<li><textarea id="filterContent"

|

||||

placeholder="Content to filter out (one entry per line)" rows="5" cols="52"></textarea></li>

|

||||

|

||||

|

||||

<li><input type="button" onclick="updateCrawlSource()" name="btnRegister" value="Submit"

|

||||

id="btnRegister" class="btn_red"></li>

|

||||

@ -269,6 +273,7 @@

|

||||

$("#bookContentUrl").val(crawlRule.bookContentUrl);

|

||||

$("#contentStart").val(crawlRule.contentStart);

|

||||

$("#contentEnd").val(crawlRule.contentEnd);

|

||||

$("#filterContent").val(crawlRule.filterContent);

|

||||

|

||||

}

|

||||

}

|

||||

@ -488,6 +493,9 @@

|

||||

|

||||

crawlRule.contentEnd = contentEnd;

|

||||

|

||||

var filterContent = $("#filterContent").val();

|

||||

crawlRule.filterContent = filterContent;

|

||||

|

||||

|

||||

$.ajax({

|

||||

type: "POST",

|

||||

|

||||

@ -5,7 +5,7 @@

|

||||

<parent>

|

||||

<artifactId>novel</artifactId>

|

||||

<groupId>com.java2nb</groupId>

|

||||

<version>4.3.0-RC1</version>

|

||||

<version>4.4.0</version>

|

||||

</parent>

|

||||

<modelVersion>4.0.0</modelVersion>

|

||||

|

||||

|

||||

@ -3,7 +3,9 @@ package com.java2nb.novel.core.schedule;

|

||||

|

||||

import com.java2nb.novel.core.config.AuthorIncomeProperties;

|

||||

import com.java2nb.novel.core.utils.DateUtil;

|

||||

import com.java2nb.novel.entity.*;

|

||||

import com.java2nb.novel.entity.Author;

|

||||

import com.java2nb.novel.entity.AuthorIncome;

|

||||

import com.java2nb.novel.entity.Book;

|

||||

import com.java2nb.novel.service.AuthorService;

|

||||

import com.java2nb.novel.service.BookService;

|

||||

import lombok.RequiredArgsConstructor;

|

||||

@ -64,24 +66,23 @@ public class MonthIncomeStaSchedule {

|

||||

|

||||

Long bookId = book.getId();

|

||||

|

||||

|

||||

// 3. Monthly income not yet recorded: compute and store the statistics per work

|

||||

Long monthIncome = authorService.queryTotalAccount(userId, bookId, startTime, endTime);

|

||||

|

||||

BigDecimal monthIncomeShare = new BigDecimal(monthIncome)

|

||||

.multiply(authorIncomeConfig.getShareProportion());

|

||||

.multiply(authorIncomeConfig.getShareProportion());

|

||||

long preTaxIncome = monthIncomeShare

|

||||

.multiply(authorIncomeConfig.getExchangeProportion())

|

||||

.multiply(new BigDecimal(100))

|

||||

.longValue();

|

||||

.multiply(authorIncomeConfig.getExchangeProportion())

|

||||

.multiply(new BigDecimal(100))

|

||||

.longValue();

|

||||

|

||||

totalPreTaxIncome += preTaxIncome;

|

||||

|

||||

long afterTaxIncome = monthIncomeShare

|

||||

.multiply(authorIncomeConfig.getTaxRate())

|

||||

.multiply(authorIncomeConfig.getExchangeProportion())

|

||||

.multiply(new BigDecimal(100))

|

||||

.longValue();

|

||||

.multiply(authorIncomeConfig.getTaxRate())

|

||||

.multiply(authorIncomeConfig.getExchangeProportion())

|

||||

.multiply(new BigDecimal(100))

|

||||

.longValue();

|

||||

|

||||

totalAfterTaxIncome += afterTaxIncome;
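The pre-tax and after-tax figures above are both derived from the author's share of the month's subscription total. A small worked sketch of that arithmetic follows; the three rates are made-up values standing in for `authorIncomeConfig.getShareProportion()`, `getExchangeProportion()`, and `getTaxRate()`, and the class name `IncomeSketch` is hypothetical.

```java
import java.math.BigDecimal;

class IncomeSketch {

    // Assumed illustrative rates; the real values come from configuration.
    static final BigDecimal SHARE_PROPORTION = new BigDecimal("0.7");
    static final BigDecimal EXCHANGE_PROPORTION = new BigDecimal("0.01");
    static final BigDecimal TAX_RATE = new BigDecimal("0.9");
    static final BigDecimal HUNDRED = new BigDecimal(100);

    /** Author's share of the monthly subscription total. */
    static BigDecimal share(long monthIncome) {
        return new BigDecimal(monthIncome).multiply(SHARE_PROPORTION);
    }

    /** share * exchangeProportion * 100, truncated by longValue(). */
    static long preTax(long monthIncome) {
        return share(monthIncome)
                .multiply(EXCHANGE_PROPORTION)
                .multiply(HUNDRED)
                .longValue();
    }

    /** share * taxRate * exchangeProportion * 100, truncated by longValue(). */
    static long afterTax(long monthIncome) {
        return share(monthIncome)
                .multiply(TAX_RATE)
                .multiply(EXCHANGE_PROPORTION)
                .multiply(HUNDRED)
                .longValue();
    }

    public static void main(String[] args) {
        System.out.println(preTax(1005));   // 703.5 truncates to 703
        System.out.println(afterTax(1005)); // 633.15 truncates to 633
    }
}
```

Note that `longValue()` discards the fractional part rather than rounding, so summing per-work results can differ slightly from computing once on the author's total.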

|

||||

|

||||

@ -102,7 +103,7 @@ public class MonthIncomeStaSchedule {

|

||||

|

||||

}

|

||||

|

||||

if (totalPreTaxIncome > 0 && !authorService.queryIsStatisticsMonth(0L, endTime)) {

|

||||

if (totalPreTaxIncome > 0 && !authorService.queryIsStatisticsMonth(authorId, 0L, endTime)) {

|

||||

|

||||

AuthorIncome authorIncome = new AuthorIncome();

|

||||

authorIncome.setAuthorId(authorId);

|

||||

|

||||

@ -1,10 +1,10 @@

|

||||

package com.java2nb.novel.service;

|

||||

|

||||

|

||||

import io.github.xxyopen.model.page.PageBean;

|

||||

import com.java2nb.novel.entity.Author;

|

||||

import com.java2nb.novel.entity.AuthorIncome;

|

||||

import com.java2nb.novel.entity.AuthorIncomeDetail;

|

||||

import io.github.xxyopen.model.page.PageBean;

|

||||

|

||||

import java.util.Date;

|

||||

import java.util.List;

|

||||

@ -16,45 +16,51 @@ public interface AuthorService {

|

||||

|

||||

/**

|

||||

* Check whether the pen name already exists

|

||||

*

|

||||

* @param penName Pen name to check

|

||||

* @return true: the pen name exists; false: it does not

|

||||

* */

|

||||

*/

|

||||

Boolean checkPenName(String penName);

|

||||

|

||||

/**

|

||||

* Register as an author

|

||||

*

|

||||

* @param userId ID of the registering user

|

||||

*@param author Registration details

|

||||

* @param author Registration details

|

||||

* @return Error message

|

||||

* */

|

||||

*/

|

||||

String register(Long userId, Author author);

|

||||

|

||||

/**

|

||||

* Check whether the user is an author

|

||||

*

|

||||

* @param userId User ID

|

||||

* @return true: is an author; false: is not an author

|

||||

* */

|

||||

*/

|

||||

Boolean isAuthor(Long userId);

|

||||

|

||||

/**

|

||||

* Query author information

|

||||

*

|

||||

* @param userId User ID

|

||||

* @return Author object

|

||||

* */

|

||||

*/

|

||||

Author queryAuthor(Long userId);

|

||||

|

||||

/**

|

||||

* Query the author list

|

||||

* @return Author list

|

||||

* @param limit Number of records to query

|

||||

*

|

||||

* @param limit Number of records to query

|

||||

* @param maxAuthorCreateTime Latest application time

|

||||

* @return Author list

|

||||

*/

|

||||

List<Author> queryAuthorList(int limit, Date maxAuthorCreateTime);

|

||||

|

||||

/**

|

||||

* Check whether the daily income statistics have been stored

|

||||

*

|

||||

* @param bookId Work ID

|

||||

* @param date Income date

|

||||

* @param date Income date

|

||||

* @return true: already stored; false: not yet stored

|

||||

*/

|

||||

boolean queryIsStatisticsDaily(Long bookId, Date date);

|

||||

@ -62,67 +68,75 @@ public interface AuthorService {

|

||||

|

||||

/**

|

||||

* Save daily income statistics (per work)

|

||||

*

|

||||

* @param authorIncomeDetail Income details

|

||||

* */

|

||||

*/

|

||||

void saveDailyIncomeSta(AuthorIncomeDetail authorIncomeDetail);

|

||||

|

||||

|

||||

|

||||

/**

|

||||

* Check whether the monthly income statistics have been stored

|

||||

* @param bookId Work ID

|

||||

*

|

||||

* @param bookId Work ID

|

||||

* @param incomeDate Income date

|

||||

* @return true: already stored; false: not yet stored

|

||||

* */

|

||||

*/

|

||||

boolean queryIsStatisticsMonth(Long bookId, Date incomeDate);

|

||||

|

||||

boolean queryIsStatisticsMonth(Long authorId, Long bookId, Date incomeDate);

|

||||

|

||||

/**

|

||||

* Query the total subscription amount within a time period

|

||||

*

|

||||

* @param userId

|

||||

* @param bookId Work ID

|

||||

* @param bookId Work ID

|

||||

* @param startTime Start time

|

||||

* @param endTime End time

|

||||

* @param endTime End time

|

||||

* @return Subscription amount (in 屋币)

|

||||

* */

|

||||

*/

|

||||

Long queryTotalAccount(Long userId, Long bookId, Date startTime, Date endTime);

|

||||

|

||||

|

||||

/**

|

||||

* Save monthly income statistics

|

||||

*

|

||||

* @param authorIncome Income details

|

||||

* */

|

||||

*/

|

||||

void saveAuthorIncomeSta(AuthorIncome authorIncome);

|

||||

|

||||

/**

|

||||

* Check whether the daily income statistics have been stored

|

||||

*

|

||||

* @param authorId Author ID

|

||||

* @param bookId Work ID

|

||||

* @param date Income date

|

||||

* @param bookId Work ID

|

||||

* @param date Income date

|

||||

* @return true: already stored; false: not yet stored

|

||||

*/

|

||||

boolean queryIsStatisticsDaily(Long authorId, Long bookId, Date date);

|

||||

|

||||

/**

|

||||

*Paginated query of an author's daily income statistics

|

||||

* Paginated query of an author's daily income statistics

|

||||

*

|

||||

* @param userId

|

||||

* @param page Page number

|

||||

* @param pageSize Page size

|

||||

* @param bookId Novel ID

|

||||

* @param page Page number

|

||||

* @param pageSize Page size

|

||||

* @param bookId Novel ID

|

||||

* @param startTime Start time

|

||||

* @param endTime End time

|

||||

* @param endTime End time

|

||||

* @return Paginated daily income statistics

|

||||

*/

|

||||

PageBean<AuthorIncomeDetail> listIncomeDailyByPage(int page, int pageSize, Long userId, Long bookId, Date startTime, Date endTime);

|

||||

PageBean<AuthorIncomeDetail> listIncomeDailyByPage(int page, int pageSize, Long userId, Long bookId, Date startTime,

|

||||

Date endTime);

|

||||

|

||||

|

||||

/**

|

||||

* Paginated query of an author's monthly income statistics

|

||||

* @param page Page number

|

||||

*

|

||||

* @param page Page number

|

||||

* @param pageSize Page size

|

||||

* @param userId User ID

|

||||

* @param bookId Novel ID

|

||||

* @param userId User ID

|

||||

* @param bookId Novel ID

|

||||

* @return Paginated data

|

||||

* */

|

||||

*/

|

||||

PageBean<AuthorIncome> listIncomeMonthByPage(int page, int pageSize, Long userId, Long bookId);

|

||||

}

|

||||

|

||||

@ -1,23 +1,15 @@

|

||||

package com.java2nb.novel.service.impl;

|

||||

|

||||

import com.github.pagehelper.PageHelper;

|

||||

import io.github.xxyopen.model.page.PageBean;

|

||||

import com.java2nb.novel.core.cache.CacheKey;

|

||||

import com.java2nb.novel.core.cache.CacheService;

|

||||

import com.java2nb.novel.core.enums.ResponseStatus;

|

||||

import io.github.xxyopen.model.page.builder.pagehelper.PageBuilder;

|

||||

import io.github.xxyopen.web.exception.BusinessException;

|

||||

import com.java2nb.novel.entity.Author;

|

||||

import com.java2nb.novel.entity.AuthorIncome;

|

||||

import com.java2nb.novel.entity.AuthorIncomeDetail;

|

||||

import com.java2nb.novel.entity.FriendLink;

|

||||

import com.java2nb.novel.mapper.*;

|

||||

import com.java2nb.novel.service.AuthorService;

|

||||

import com.java2nb.novel.service.FriendLinkService;

|

||||

import io.github.xxyopen.model.page.PageBean;

|

||||

import io.github.xxyopen.model.page.builder.pagehelper.PageBuilder;

|

||||

import lombok.RequiredArgsConstructor;

|

||||

import org.mybatis.dynamic.sql.render.RenderingStrategies;

|

||||

import org.mybatis.dynamic.sql.select.CountDSLCompleter;

|

||||

import org.mybatis.dynamic.sql.select.render.SelectStatementProvider;

|

||||

import org.springframework.stereotype.Service;

|

||||

import org.springframework.transaction.annotation.Transactional;

|

||||

|

||||

@ -25,10 +17,6 @@ import java.util.Date;

|

||||

import java.util.List;

|

||||

|

||||

import static com.java2nb.novel.mapper.AuthorCodeDynamicSqlSupport.authorCode;

|

||||

import static com.java2nb.novel.mapper.BookDynamicSqlSupport.book;

|

||||

import static com.java2nb.novel.mapper.BookDynamicSqlSupport.id;

|

||||

import static com.java2nb.novel.mapper.BookDynamicSqlSupport.updateTime;

|

||||

import static com.java2nb.novel.mapper.FriendLinkDynamicSqlSupport.*;

|

||||

import static org.mybatis.dynamic.sql.SqlBuilder.*;

|

||||

import static org.mybatis.dynamic.sql.select.SelectDSL.select;

|

||||

|

||||

@ -52,7 +40,7 @@ public class AuthorServiceImpl implements AuthorService {

|

||||

@Override

|

||||

public Boolean checkPenName(String penName) {

|

||||

return authorMapper.count(c ->

|

||||

c.where(AuthorDynamicSqlSupport.penName, isEqualTo(penName))) > 0;

|

||||

c.where(AuthorDynamicSqlSupport.penName, isEqualTo(penName))) > 0;

|

||||

}

|

||||

|

||||

@Transactional(rollbackFor = Exception.class)

|

||||

@ -61,21 +49,21 @@ public class AuthorServiceImpl implements AuthorService {

|

||||

Date currentDate = new Date();

|

||||

// Check whether the invitation code is valid

|

||||

if (authorCodeMapper.count(c ->

|

||||

c.where(AuthorCodeDynamicSqlSupport.inviteCode, isEqualTo(author.getInviteCode()))

|

||||

.and(AuthorCodeDynamicSqlSupport.isUse, isEqualTo((byte) 0))

|

||||

.and(AuthorCodeDynamicSqlSupport.validityTime, isGreaterThan(currentDate))) > 0) {

|

||||

// The invitation code is valid

|

||||

c.where(AuthorCodeDynamicSqlSupport.inviteCode, isEqualTo(author.getInviteCode()))

|

||||

.and(AuthorCodeDynamicSqlSupport.isUse, isEqualTo((byte) 0))

|

||||

.and(AuthorCodeDynamicSqlSupport.validityTime, isGreaterThan(currentDate))) > 0) {

|

||||

// The invitation code is valid

|

||||

// Save the author information

|

||||

author.setUserId(userId);

|

||||

author.setCreateTime(currentDate);

|

||||

authorMapper.insertSelective(author);

|

||||

// Mark the invitation code as used

|

||||

authorCodeMapper.update(update(authorCode)

|

||||

.set(AuthorCodeDynamicSqlSupport.isUse)

|

||||

.equalTo((byte) 1)

|

||||

.where(AuthorCodeDynamicSqlSupport.inviteCode,isEqualTo(author.getInviteCode()))

|

||||

.build()

|

||||

.render(RenderingStrategies.MYBATIS3));

|

||||

.set(AuthorCodeDynamicSqlSupport.isUse)

|

||||

.equalTo((byte) 1)

|

||||

.where(AuthorCodeDynamicSqlSupport.inviteCode, isEqualTo(author.getInviteCode()))

|

||||

.build()

|

||||

.render(RenderingStrategies.MYBATIS3));

|

||||

return "";

|

||||

} else {

|

||||

// The invitation code is invalid

|

||||

@ -87,15 +75,15 @@ public class AuthorServiceImpl implements AuthorService {

|

||||

@Override

|

||||

public Boolean isAuthor(Long userId) {

|

||||

return authorMapper.count(c ->

|

||||

c.where(AuthorDynamicSqlSupport.userId, isEqualTo(userId))) > 0;

|

||||

c.where(AuthorDynamicSqlSupport.userId, isEqualTo(userId))) > 0;

|

||||

}

|

||||

|

||||

@Override

|

||||

public Author queryAuthor(Long userId) {

|

||||

return authorMapper.selectMany(

|

||||

select(AuthorDynamicSqlSupport.id,AuthorDynamicSqlSupport.penName,AuthorDynamicSqlSupport.status)

|

||||

.from(AuthorDynamicSqlSupport.author)

|

||||

.where(AuthorDynamicSqlSupport.userId,isEqualTo(userId))

|

||||

select(AuthorDynamicSqlSupport.id, AuthorDynamicSqlSupport.penName, AuthorDynamicSqlSupport.status)

|

||||

.from(AuthorDynamicSqlSupport.author)

|

||||

.where(AuthorDynamicSqlSupport.userId, isEqualTo(userId))

|

||||

.build()

|

||||

.render(RenderingStrategies.MYBATIS3)).get(0);

|

||||

}

|

||||

@ -103,12 +91,12 @@ public class AuthorServiceImpl implements AuthorService {

|

||||

@Override

|

||||

public List<Author> queryAuthorList(int needAuthorNumber, Date maxAuthorCreateTime) {

|

||||

return authorMapper.selectMany(select(AuthorDynamicSqlSupport.id, AuthorDynamicSqlSupport.userId)

|

||||

.from(AuthorDynamicSqlSupport.author)

|

||||

.where(AuthorDynamicSqlSupport.createTime, isLessThan(maxAuthorCreateTime))

|

||||

.orderBy(AuthorDynamicSqlSupport.createTime.descending())

|

||||

.limit(needAuthorNumber)

|

||||

.build()

|

||||

.render(RenderingStrategies.MYBATIS3));

|

||||

.from(AuthorDynamicSqlSupport.author)

|

||||

.where(AuthorDynamicSqlSupport.createTime, isLessThan(maxAuthorCreateTime))

|

||||

.orderBy(AuthorDynamicSqlSupport.createTime.descending())

|

||||

.limit(needAuthorNumber)

|

||||

.build()

|

||||

.render(RenderingStrategies.MYBATIS3));

|

||||

}

|

||||

|

||||

|

||||

@ -116,11 +104,11 @@ public class AuthorServiceImpl implements AuthorService {

|

||||

public boolean queryIsStatisticsDaily(Long bookId, Date date) {

|

||||

|

||||

return authorIncomeDetailMapper.selectMany(select(AuthorIncomeDetailDynamicSqlSupport.id)

|

||||

.from(AuthorIncomeDetailDynamicSqlSupport.authorIncomeDetail)

|

||||

.where(AuthorIncomeDetailDynamicSqlSupport.bookId, isEqualTo(bookId))

|

||||

.and(AuthorIncomeDetailDynamicSqlSupport.incomeDate, isEqualTo(date))

|

||||

.build()

|

||||

.render(RenderingStrategies.MYBATIS3)).size() > 0;

|

||||

.from(AuthorIncomeDetailDynamicSqlSupport.authorIncomeDetail)

|

||||

.where(AuthorIncomeDetailDynamicSqlSupport.bookId, isEqualTo(bookId))

|

||||

.and(AuthorIncomeDetailDynamicSqlSupport.incomeDate, isEqualTo(date))

|

||||

.build()

|

||||

.render(RenderingStrategies.MYBATIS3)).size() > 0;

|

||||

|

||||

}

|

||||

|

||||

@ -133,24 +121,35 @@ public class AuthorServiceImpl implements AuthorService {

|

||||

@Override

|

||||

public boolean queryIsStatisticsMonth(Long bookId, Date incomeDate) {

|

||||

return authorIncomeMapper.selectMany(select(AuthorIncomeDynamicSqlSupport.id)

|

||||

.from(AuthorIncomeDynamicSqlSupport.authorIncome)

|

||||

.where(AuthorIncomeDynamicSqlSupport.bookId, isEqualTo(bookId))

|

||||

.and(AuthorIncomeDynamicSqlSupport.incomeMonth, isEqualTo(incomeDate))

|

||||

.build()

|

||||

.render(RenderingStrategies.MYBATIS3)).size() > 0;

|

||||

.from(AuthorIncomeDynamicSqlSupport.authorIncome)

|

||||

.where(AuthorIncomeDynamicSqlSupport.bookId, isEqualTo(bookId))

|

||||

.and(AuthorIncomeDynamicSqlSupport.incomeMonth, isEqualTo(incomeDate))

|

||||

.build()

|

||||

.render(RenderingStrategies.MYBATIS3)).size() > 0;

|

||||

}

|

||||

|

||||

@Override

|

||||

public boolean queryIsStatisticsMonth(Long authorId, Long bookId, Date incomeDate) {

|

||||

return authorIncomeMapper.selectMany(select(AuthorIncomeDynamicSqlSupport.id)

|

||||

.from(AuthorIncomeDynamicSqlSupport.authorIncome)

|

||||

.where(AuthorIncomeDynamicSqlSupport.bookId, isEqualTo(bookId))

|

||||

.and(AuthorIncomeDynamicSqlSupport.authorId, isEqualTo(authorId))

|

||||

.and(AuthorIncomeDynamicSqlSupport.incomeMonth, isEqualTo(incomeDate))

|

||||

.build()

|

||||

.render(RenderingStrategies.MYBATIS3)).size() > 0;

|

||||

}
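The diff does not say why `authorId` was added to `queryIsStatisticsMonth`. One plausible reading, given that `MonthIncomeStaSchedule` passes `0L` as the `bookId` for an author's monthly total, is that a check on `bookId` and month alone would match any author's summary row. The in-memory sketch below illustrates that reading only; `IncomeRow`, `existsByBook`, and `existsByAuthorAndBook` are hypothetical and do not reflect the actual table or mapper.

```java
import java.util.Arrays;
import java.util.List;

class MonthStatCheckSketch {

    /** Hypothetical, simplified view of one monthly income row. */
    static final class IncomeRow {
        final long authorId;
        final long bookId;   // 0 is assumed to mark the per-author summary row
        final String month;  // e.g. "2024-05"

        IncomeRow(long authorId, long bookId, String month) {
            this.authorId = authorId;
            this.bookId = bookId;
            this.month = month;
        }
    }

    /** Old-style check: filters on bookId and month only. */
    static boolean existsByBook(List<IncomeRow> rows, long bookId, String month) {
        return rows.stream()
                .anyMatch(r -> r.bookId == bookId && r.month.equals(month));
    }

    /** New-style check: also filters on authorId. */
    static boolean existsByAuthorAndBook(List<IncomeRow> rows, long authorId,
                                         long bookId, String month) {
        return rows.stream()
                .anyMatch(r -> r.authorId == authorId
                        && r.bookId == bookId
                        && r.month.equals(month));
    }

    public static void main(String[] args) {
        // Only author 1 has a stored summary row for the month.
        List<IncomeRow> rows = Arrays.asList(new IncomeRow(1L, 0L, "2024-05"));
        // Asking on behalf of author 2:
        System.out.println(existsByBook(rows, 0L, "2024-05"));
        System.out.println(existsByAuthorAndBook(rows, 2L, 0L, "2024-05"));
    }
}
```

Under this assumption, the two-argument check would report author 2's summary as already stored once author 1's row exists, so author 2's total would never be written.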

|

||||

|

||||

@Override

|

||||

public Long queryTotalAccount(Long userId, Long bookId, Date startTime, Date endTime) {

|

||||

|

||||

return authorIncomeDetailMapper.selectStatistic(select(sum(AuthorIncomeDetailDynamicSqlSupport.incomeAccount))

|

||||